Pixels, voxels, and views: A study of shape representations for single view 3D object shape prediction

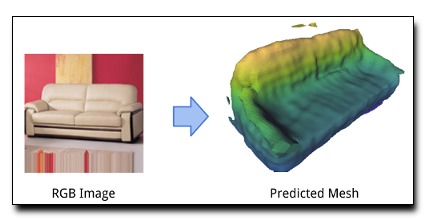

The goal of this paper is to compare surface-based and volumetric 3D object shape representations, as well as viewer-centered and object-centered reference frames for single-view 3D shape prediction. We propose a new algorithm for predicting depth maps from multiple viewpoints, with a single depth or RGB image as input. By modifying the network and the way models are evaluated, we can directly compare the merits of voxels vs. surfaces and viewer-centered vs. object-centered for familiar vs. unfamiliar objects, as predicted from RGB or depth images. Among our findings, we show that surface-based methods outperform voxel representations for objects from novel classes and produce higher resolution outputs. We also find that using viewer-centered coordinates is advantageous for novel objects, while object-centered representations are better for more familiar objects. Interestingly, the coordinate frame significantly affects the shape representation learned, with object-centered placing more importance on implicitly recognizing the object category and viewer-centered producing shape representations with less dependence on category recognition.
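The abstract contrasts surface-based outputs (depth maps) with voxels, and viewer-centered with object-centered reference frames. The sketch below shows how these pieces relate. It back-projects a depth map into viewer-centered 3D points, then rotates those points into a canonical object-centered frame.

It rests on several assumptions. The camera is orthographic. The camera pose is given as an azimuth/elevation rotation with no translation. Depth maps are nested lists, with `None` marking background pixels. All function names are hypothetical, and this is not the authors' implementation.

```python
import math

# Illustrative sketch only; helper names are hypothetical, not from the paper.

def matmul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def transpose(m):
    """Transpose a 3x3 matrix."""
    return [list(row) for row in zip(*m)]

def rotate(m, p):
    """Apply a 3x3 matrix to a 3D point given as a tuple."""
    return tuple(sum(m[i][k] * p[k] for k in range(3)) for i in range(3))

def rotation_matrix(azimuth_deg, elevation_deg):
    """Assumed camera model: rotation taking object-centered coordinates to
    viewer-centered ones, as a yaw about the vertical (y) axis by the azimuth
    followed by a pitch about the x axis by the elevation."""
    a = math.radians(azimuth_deg)
    e = math.radians(elevation_deg)
    ry = [[math.cos(a), 0.0, math.sin(a)],
          [0.0, 1.0, 0.0],
          [-math.sin(a), 0.0, math.cos(a)]]
    rx = [[1.0, 0.0, 0.0],
          [0.0, math.cos(e), -math.sin(e)],
          [0.0, math.sin(e), math.cos(e)]]
    return matmul(rx, ry)

def depth_to_points(depth, pixel_size=1.0):
    """Back-project a depth map to viewer-centered 3D points, assuming an
    orthographic camera looking down -z with pixel (row, col) placed at
    x = col, y = -row. Pixels whose depth is None (background) are skipped."""
    points = []
    for r, row in enumerate(depth):
        for c, d in enumerate(row):
            if d is not None:
                points.append((c * pixel_size, -r * pixel_size, -d))
    return points

def viewer_to_object(points, azimuth_deg, elevation_deg):
    """Express viewer-centered points in the object-centered frame by undoing
    the camera rotation; rotations are orthonormal, so the inverse is R^T."""
    r_inv = transpose(rotation_matrix(azimuth_deg, elevation_deg))
    return [rotate(r_inv, p) for p in points]
```

For example, `viewer_to_object(depth_to_points([[1.0, 2.0], [None, 3.0]]), 30, 20)` yields three object-centered points; the background pixel is dropped. The viewer-centered step needs nothing beyond the depth map itself. The object-centered step additionally needs the camera pose relative to a canonical frame, and a viewer-centered prediction never has to estimate that pose.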


Text Reference

Daeyun Shin, Charless Fowlkes, and Derek Hoiem. Pixels, voxels, and views: A study of shape representations for single view 3D object shape prediction. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2018.

BibTeX Reference

@inproceedings{ShinFH_CVPR_2018,
  author = "Shin, Daeyun and Fowlkes, Charless and Hoiem, Derek",
  booktitle = "IEEE Conference on Computer Vision and Pattern Recognition (CVPR)",
  title = "Pixels, voxels, and views: A study of shape representations for single view {3D} object shape prediction",
  year = "2018",
  tag = "geometry"
}