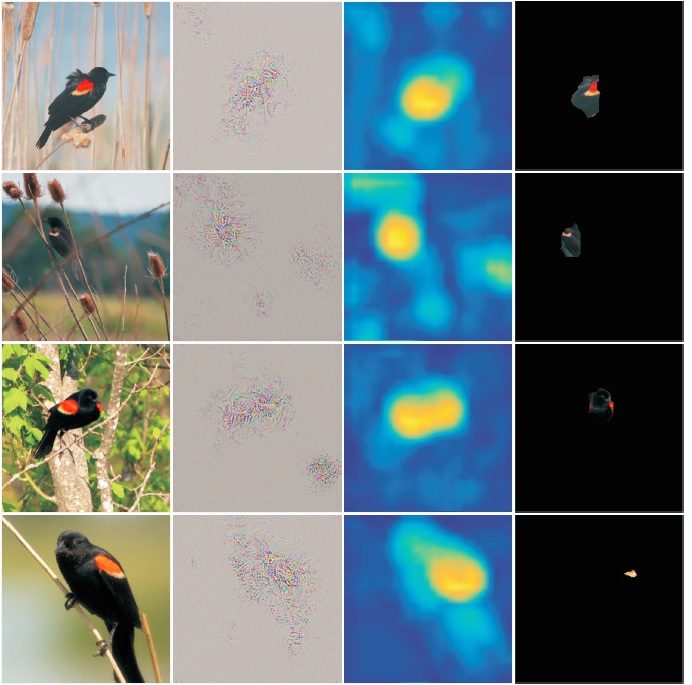

Pooling second-order local feature statistics to form a high-dimensional bilinear feature has been shown to achieve state-of-the-art performance on a variety of fine-grained classification tasks. To address the computational demands of high feature dimensionality, we propose to represent the covariance features as a matrix and apply a low-rank bilinear classifier. The resulting classifier can be evaluated without explicitly computing the bilinear feature map, which substantially reduces compute time and decreases the effective number of parameters to be learned.
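To make the "without explicitly computing the bilinear feature map" claim concrete, here is a minimal NumPy sketch. It assumes the classifier for one class is a symmetric low-rank matrix written as a difference of two factor products, `U_pos @ U_pos.T - U_neg @ U_neg.T`; that parameterization, the function names, and the sizes `c`, `n`, `r` are illustrative assumptions, not details stated in the abstract. Under that assumption, the score against the pooled feature `X @ X.T` reduces to a difference of squared Frobenius norms of small projected matrices.

```python
import numpy as np

def explicit_score(X, U_pos, U_neg):
    """Reference score that forms the full c x c bilinear feature (expensive)."""
    W = U_pos @ U_pos.T - U_neg @ U_neg.T  # assumed low-rank symmetric classifier, c x c
    B = X @ X.T                            # pooled second-order feature, c x c
    return float(np.sum(W * B))            # equals trace(W^T B)

def lowrank_score(X, U_pos, U_neg):
    """Same score computed from r x n projections; no c x c matrix is formed."""
    return float(np.sum((U_pos.T @ X) ** 2) - np.sum((U_neg.T @ X) ** 2))

# Hypothetical sizes: c channels, n spatial locations, rank r per factor.
rng = np.random.default_rng(0)
c, n, r = 64, 49, 4
X = rng.standard_normal((c, n))        # local features, one column per location
U_pos = rng.standard_normal((c, r))
U_neg = rng.standard_normal((c, r))

# The two scores agree up to floating-point error.
print(np.isclose(explicit_score(X, U_pos, U_neg),
                 lowrank_score(X, U_pos, U_neg), rtol=1e-6, atol=1e-6))
```

The identity behind the shortcut is trace(U Uᵀ X Xᵀ) = ‖Uᵀ X‖²_F, so the cost scales with `c * r * n` rather than `c * c * n`.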

To further compress the model, we propose classifier co-decomposition, which factorizes the collection of bilinear classifiers into a common factor and compact per-class terms. The co-decomposition idea can be deployed through two convolutional layers and trained in an end-to-end architecture. We suggest a simple yet effective initialization that avoids explicitly first training and factorizing the larger bilinear classifiers. Through extensive experiments, we show that our model achieves state-of-the-art performance on several public datasets for fine-grained classification trained with only category labels. Importantly, our final model is an order of magnitude smaller than the recently proposed compact bilinear model, and three orders of magnitude smaller than the standard bilinear CNN model.
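The co-decomposition idea can be sketched in the same style. The code below assumes each class factor is the product of a shared projection `P` (the common factor) and a small per-class matrix, so that scoring becomes a shared projection followed by per-class projections. This is one plausible reading of the "two convolutional layers" description, with each projection playing the role of a 1x1 convolution. The names `P`, `V_pos`, `V_neg` and all sizes are hypothetical.

```python
import numpy as np

def codecomposed_scores(X, P, V_pos, V_neg):
    """Scores for all K classes from a shared factor P (c x m) and
    per-class factors V_pos, V_neg of shape (K, m, r)."""
    Z = P.T @ X                                 # shared step, (m, n)
    pos = np.einsum('kmr,mn->krn', V_pos, Z)    # per-class step, (K, r, n)
    neg = np.einsum('kmr,mn->krn', V_neg, Z)
    return np.sum(pos ** 2, axis=(1, 2)) - np.sum(neg ** 2, axis=(1, 2))

# Hypothetical sizes: K classes, c channels, n locations, shared dim m, rank r.
rng = np.random.default_rng(0)
K, c, n, m, r = 10, 64, 49, 16, 4
X = rng.standard_normal((c, n))
P = rng.standard_normal((c, m))
V_pos = rng.standard_normal((K, m, r))
V_neg = rng.standard_normal((K, m, r))

scores = codecomposed_scores(X, P, V_pos, V_neg)

# Parameter counts for these illustrative sizes only.
full_params = K * c * c                # one dense c x c classifier per class
codec_params = c * m + 2 * K * m * r   # shared factor plus per-class terms
print(scores.shape, full_params, codec_params)
```

With these toy sizes the dense classifiers would need 40,960 parameters versus 2,304 for the factored form; the actual savings depend on the dimensions chosen in practice.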

Download: pdf

Text Reference

Shu Kong and Charless C. Fowlkes. Low-rank bilinear pooling for fine-grained classification. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017.

BibTeX Reference

@inproceedings{KongF_CVPR_2017,
  author = "Kong, Shu and Fowlkes, Charless C.",
  booktitle = "IEEE Conference on Computer Vision and Pattern Recognition (CVPR)",
  title = "Low-rank Bilinear Pooling for Fine-grained Classification",
  year = "2017",
  tag = "object_recognition,fine_grained,grouping"
}