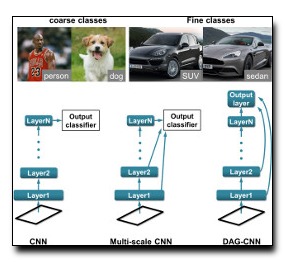

We explore multi-scale convolutional neural nets (CNNs) for image classification. Contemporary approaches extract features from a single output layer. By extracting features from multiple layers, one can simultaneously reason about high-, mid-, and low-level features during classification. The resulting multi-scale architecture can itself be seen as a feed-forward model that is structured as a directed acyclic graph (DAG-CNNs). We use DAG-CNNs to learn a set of multi-scale features that can be effectively shared between coarse and fine-grained classification tasks. While fine-tuning such models helps performance, we show that even “off-the-shelf” multi-scale features perform quite well. We present extensive analysis and demonstrate state-of-the-art classification performance on three standard scene benchmarks (SUN397, MIT67, and Scene15). On the heavily benchmarked MIT67 and Scene15 datasets, our results reduce the lowest previously reported error by 23.9% and 9.5%, respectively.
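The core idea of tapping several layers and combining them in one classifier can be sketched in a few lines. This is a minimal, hedged sketch in plain Python, not the authors' implementation. It assumes that each tapped layer's activation map (H x W x C) is spatially average-pooled, L2-normalized, and fed to its own linear classifier, and that the per-layer class scores are summed before a softmax. The function names, these pooling and normalization choices, and the toy weights are illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]  # one row of weights per output class
FeatureMap = Sequence[Sequence[Sequence[float]]]  # H x W x C activations


def spatial_average_pool(feature_map: FeatureMap) -> Vector:
    """Average an H x W x C activation map over all spatial positions -> C-dim vector."""
    h, w, c = len(feature_map), len(feature_map[0]), len(feature_map[0][0])
    pooled = [0.0] * c
    for row in feature_map:
        for cell in row:
            for k in range(c):
                pooled[k] += cell[k]
    return [v / (h * w) for v in pooled]


def l2_normalize(v: Vector, eps: float = 1e-12) -> Vector:
    """Scale a vector to (approximately) unit L2 norm so no single scale dominates."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / (norm + eps) for x in v]


def linear(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Per-scale linear classifier: scores = W x + b."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def dag_cnn_scores(layer_maps: Sequence[FeatureMap],
                   classifiers: Sequence[Tuple[Matrix, Vector]]) -> Vector:
    """Sum the class scores produced from each tapped layer (hypothetical helper)."""
    if not layer_maps or len(layer_maps) != len(classifiers):
        raise ValueError("need one classifier per tapped layer")
    total: Vector = []
    for fmap, (weights, bias) in zip(layer_maps, classifiers):
        feat = l2_normalize(spatial_average_pool(fmap))
        scores = linear(weights, bias, feat)
        total = scores if not total else [t + s for t, s in zip(total, scores)]
    return total


def softmax(z: Vector) -> Vector:
    """Numerically stable softmax over the summed scores."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    s = sum(exps)
    return [e / s for e in exps]


if __name__ == "__main__":
    # Toy example: a "low-level" 2x2x2 map and a "high-level" 1x1x3 map, 2 classes.
    low = [[[1.0, 0.0], [1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]]]
    high = [[[0.0, 3.0, 4.0]]]
    clf_low = ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
    clf_high = ([[0.0, 0.0, 0.0], [0.0, 1.0, 1.0]], [0.0, 0.0])
    scores = dag_cnn_scores([low, high], [clf_low, clf_high])
    print("summed scores:", scores)
    print("class probabilities:", softmax(scores))
```

Summing per-layer linear scores is equivalent to a single linear classifier over the concatenation of the normalized per-layer features, since the sum over layers of W_i f_i + b_i equals [W_1 ... W_n] applied to [f_1; ...; f_n], plus the summed biases. Keeping one classifier per layer simply makes each scale's contribution explicit. With frozen ("off-the-shelf") features, only these linear weights would need to be trained.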


Text Reference

Songfan Yang and Deva Ramanan. Multi-scale recognition with DAG-CNNs. In IEEE International Conference on Computer Vision, 2015.

BibTeX Reference

@INPROCEEDINGS{YangR_ICCV_2015,
  author = "Yang, Songfan and Ramanan, Deva",
  booktitle = "IEEE International Conference on Computer Vision",
  title = "Multi-scale recognition with DAG-CNNs",
  year = "2015",
  tag = "object_recognition"
}