Datasets for training object recognition systems are steadily growing in size.

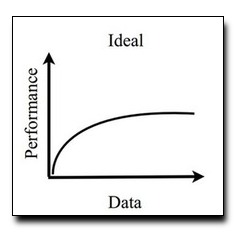

This paper investigates the question of whether existing detectors will continue to improve as data grows, or if models are close to saturating due to limited model complexity and the Bayes risk associated with the feature spaces in which they operate. We focus on the popular paradigm of scanning-window templates defined on oriented gradient features, trained with discriminative classifiers. We investigate the performance of mixtures of templates as a function of the number of templates (complexity) and the amount of training data. We find that additional data does help, but only with correct regularization and treatment of noisy examples or "outliers" in the training data. Surprisingly, the performance of problem domain-agnostic mixture models appears to saturate quickly (~10 templates and ~100 positive training examples per template). However, compositional mixtures (implemented via composed parts) give much better performance because they share parameters among templates, and can synthesize new templates not encountered during training. This suggests there is still room to improve performance with linear classifiers and the existing feature space by improved representations and learning algorithms.
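As a rough illustration of why composed parts can "synthesize" templates, the toy sketch below contrasts a plain mixture of linear templates (the best-responding template wins) with a compositional score in which each part independently picks its best local template and the part scores are summed. It is only a sketch under strong assumptions, not the implementation used in the paper: the function names, the two-dimensional toy features, and the omission of any spatial or deformation terms are all hypothetical simplifications.

```python
from itertools import product


def dot(w, x):
    """Plain dot product between a weight vector and a feature vector."""
    return sum(wi * xi for wi, xi in zip(w, x))


def mixture_score(templates, x):
    """Plain mixture: score a window by its best-responding linear template.

    `templates` is a list of (weights, bias) pairs (hypothetical layout).
    """
    return max(dot(w, x) + b for w, b in templates)


def compositional_score(parts, part_features):
    """Each part picks its own best local template; part scores are summed.

    Assumption: no spatial/deformation terms, so parts are independent.
    """
    return sum(mixture_score(choices, x)
               for choices, x in zip(parts, part_features))


def brute_force_score(parts, part_features):
    """Enumerate every combination of per-part choices (for checking only)."""
    return max(
        sum(dot(w, x) + b for (w, b), x in zip(combo, part_features))
        for combo in product(*parts)
    )


# Toy model: 3 parts, each with 2 local templates given as (weights, bias).
parts = [
    [([1.0, 0.0], 0.0), ([0.0, 1.0], 0.5)],
    [([1.0, 1.0], -1.0), ([-1.0, 0.0], 0.0)],
    [([0.5, 0.5], 0.0), ([0.0, -1.0], 1.0)],
]
# One toy feature vector per part.
part_features = [[2.0, 1.0], [0.0, 3.0], [1.0, 1.0]]

# Number of global templates the model can express implicitly,
# versus the number of local templates it actually stores.
n_implicit = 1
for choices in parts:
    n_implicit *= len(choices)
n_stored = sum(len(choices) for choices in parts)

print("implicit global templates:", n_implicit)
print("stored local templates:", n_stored)
print("compositional score:", compositional_score(parts, part_features))
print("brute-force score:", brute_force_score(parts, part_features))
```

In this toy setting the per-part maximization gives the same score as enumerating every combination of part choices, while storing only the local templates. The number of expressible global templates grows multiplicatively with the number of parts, whereas the stored parameters grow additively, which is one way to read the parameter-sharing argument above.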

PASCAL VOC 10x [dataset]

Download: pdf

Text Reference

Xiangxin Zhu, Carl Vondrick, Deva Ramanan, and Charless C. Fowlkes. Do we need more training data or better models for object detection? In British Machine Vision Conference (BMVC). 2012.

BibTeX Reference

@inproceedings{ZhuVRF_BMVC_2012,
  author = "Zhu, Xiangxin and Vondrick, Carl and Ramanan, Deva and Fowlkes, Charless C.",
  title = "Do we need more training data or better models for object detection?",
  booktitle = "British Machine Vision Conference (BMVC)",
  year = "2012"
}