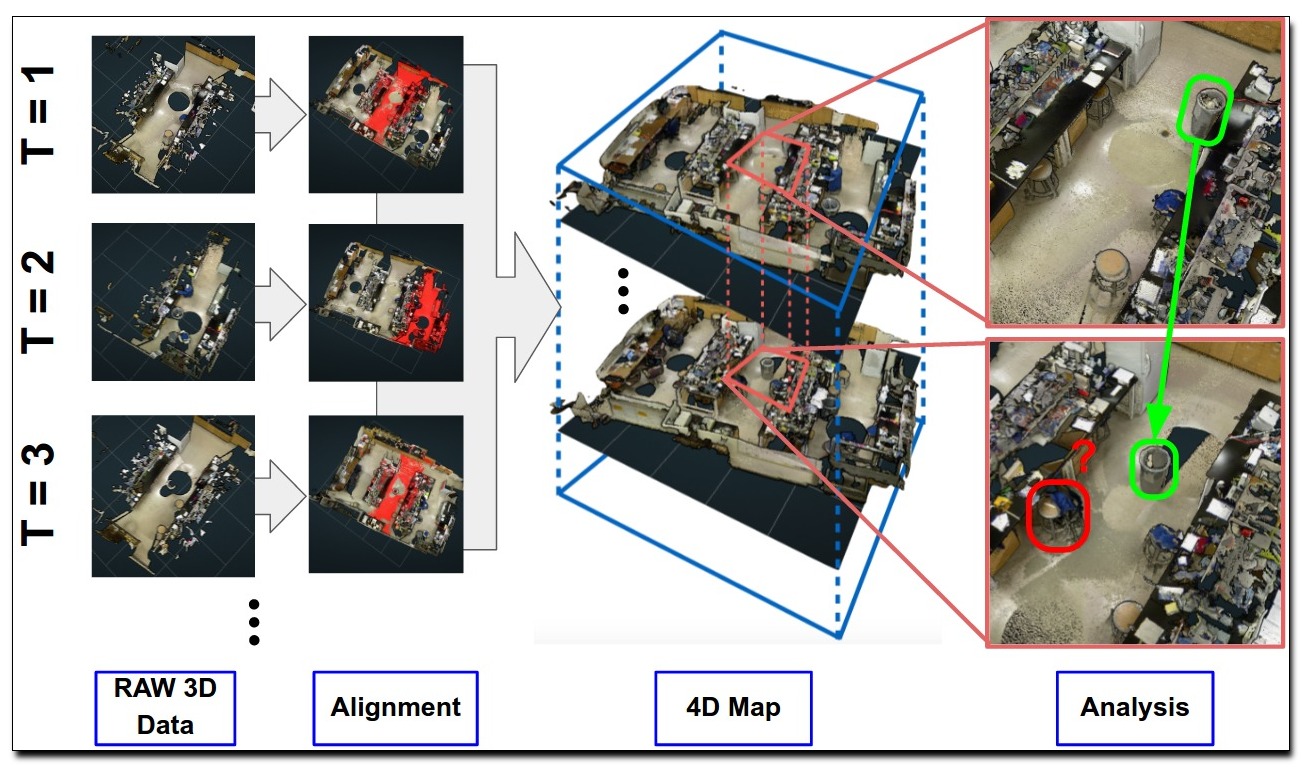

This paper addresses the problem of building a spatio-temporal model of the world from a stream of time-stamped data. Unlike traditional models for simultaneous localization and mapping (SLAM) and structure-from-motion (SfM), which focus on recovering a single rigid 3D model, we tackle the problem of mapping scenes in which dynamic components appear, move and disappear independently of each other over time. We introduce a simple generative probabilistic model of 4D structure which specifies the location and the spatial and temporal extent of rigid surface patches by local Gaussian mixtures. We fit this model to a time-stamped stream of input data using expectation-maximization to estimate the model structure parameters (mapping) and the alignment of the input data to the model (localization). By explicitly representing the temporal extent and observability of surfaces in a scene, our method yields superior localization and reconstruction relative to baselines that assume a static 3D scene. We carry out experiments on both synthetic RGB-D data streams and challenging real-world datasets, tracking scene dynamics in a human workspace over the course of several weeks.
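The mapping half of the fitting procedure can be illustrated, under strong simplifying assumptions, by plain expectation-maximization for a Gaussian mixture over (x, y, z, t) points. This is a hypothetical sketch, not the authors' model: it assumes the input points are already aligned to a common frame (no localization step), uses diagonal covariances and a fixed number of components `k`, and ignores observability. The names `em_spacetime_gmm` and `gaussian_logpdf_diag` and their parameters are illustrative only.

```python
import math
import random


def gaussian_logpdf_diag(x, mean, var):
    """Log density of an axis-aligned (diagonal-covariance) Gaussian at x."""
    return -0.5 * sum(
        math.log(2 * math.pi * v) + (xi - m) ** 2 / v
        for xi, m, v in zip(x, mean, var)
    )


def em_spacetime_gmm(points, k, iters=50, min_var=1e-4, seed=0):
    """Fit a k-component diagonal Gaussian mixture to (x, y, z, t) points.

    Hypothetical helper: assumes points are already aligned (no localization).
    Returns (weights, means, variances); for each component, the t entries of
    the mean and variance summarize when that surface patch exists.
    """
    n, d = len(points), len(points[0])
    rng = random.Random(seed)

    # Farthest-point initialization of the component means.
    means = [list(rng.choice(points))]
    while len(means) < k:
        far = max(points, key=lambda p: min(
            sum((a - b) ** 2 for a, b in zip(p, m)) for m in means))
        means.append(list(far))

    # Start every component with the global per-dimension variance.
    mu = [sum(p[j] for p in points) / n for j in range(d)]
    var0 = [max(sum((p[j] - mu[j]) ** 2 for p in points) / n, min_var)
            for j in range(d)]
    variances = [list(var0) for _ in range(k)]
    weights = [1.0 / k] * k

    for _ in range(iters):
        # E-step: responsibility of each component for each point.
        resp = []
        for p in points:
            logs = [math.log(weights[c]) +
                    gaussian_logpdf_diag(p, means[c], variances[c])
                    for c in range(k)]
            top = max(logs)  # subtract the max for numerical stability
            exps = [math.exp(lg - top) for lg in logs]
            total = sum(exps)
            resp.append([e / total for e in exps])

        # M-step: re-estimate weight, mean and variance of each component.
        for c in range(k):
            nc = sum(r[c] for r in resp)
            weights[c] = max(nc, 1e-12) / n
            if nc < 1e-9:
                continue  # keep parameters of an (almost) empty component
            means[c] = [sum(r[c] * p[j] for r, p in zip(resp, points)) / nc
                        for j in range(d)]
            variances[c] = [max(sum(r[c] * (p[j] - means[c][j]) ** 2
                                    for r, p in zip(resp, points)) / nc,
                                min_var)
                            for j in range(d)]
    return weights, means, variances
```

For example, points sampled from one small patch observed during t in [0, 1] and another observed during t in [10, 11] should yield two components whose time means lie near 0.5 and 10.5, so each component records both where and when its patch exists.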


Text Reference

Minhaeng Lee and Charless C. Fowlkes. Space-time localization and mapping. In IEEE International Conference on Computer Vision, 2017.

BibTeX Reference

@INPROCEEDINGS{LeeF_ICCV_2017,
author = "Lee, Minhaeng and Fowlkes, Charless C.",
booktitle = "IEEE International Conference on Computer Vision",
title = "Space-Time Localization and Mapping",
year = "2017",
tag = "geometry"
}