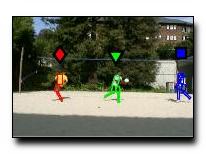

We describe a tracker that can track moving people in long sequences without manual initialization. Moving people are modeled with the assumption that, while configuration can vary quite substantially from frame to frame, appearance does not. This leads to an algorithm that first builds a model of the appearance of the body of each individual by clustering candidate body segments, and then uses this model to find all individuals in each frame. Unusually, the tracker does not rely on a model of human dynamics to identify possible instances of people; such models are unreliable, because human motion is fast and large accelerations are common. We show that our tracking algorithm can be interpreted as a loopy inference procedure on an underlying Bayes net. Experiments on video of real scenes demonstrate that this tracker can (a) count distinct individuals; (b) identify and track them; (c) recover when it loses track, for example, if individuals are occluded or briefly leave the view; (d) identify the configuration of the body largely correctly; and (e) operate without depending on particular models of human motion.
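The two-stage idea in the abstract (cluster candidate segments to learn an appearance model, then use that model to find the person in every frame) can be illustrated with a toy sketch. Everything here is an assumption made for illustration: segments are reduced to mean RGB colors, a simple greedy clustering stands in for whatever clustering method the paper uses, and the names `cluster_segments`, `build_appearance_model`, and `detect` are hypothetical. It is not the paper's implementation, and it ignores body configuration and the Bayes net interpretation entirely.

```python
from math import dist


def cluster_segments(descriptors, radius):
    """Greedy clustering (an illustrative stand-in, not the paper's method).

    Each descriptor joins the first cluster whose running mean lies within
    `radius`; otherwise it starts a new cluster.
    """
    clusters = []  # each cluster: {"mean": tuple, "members": list of tuples}
    for d in descriptors:
        for c in clusters:
            if dist(d, c["mean"]) <= radius:
                c["members"].append(d)
                n = len(c["members"])
                c["mean"] = tuple(
                    sum(m[i] for m in c["members"]) / n for i in range(len(d))
                )
                break
        else:
            clusters.append({"mean": tuple(d), "members": [d]})
    return clusters


def build_appearance_model(frames, radius):
    """Pool candidates from all frames; keep the mean of the largest cluster.

    Assumption: an appearance that recurs across many frames belongs to a
    person, because appearance is stable while configuration varies.
    """
    pooled = [d for frame in frames for d in frame]
    clusters = cluster_segments(pooled, radius)
    return max(clusters, key=lambda c: len(c["members"]))["mean"]


def detect(frames, model, radius):
    """For each frame, return the indices of candidates that match the model."""
    return [
        [i for i, d in enumerate(frame) if dist(d, model) <= radius]
        for frame in frames
    ]


# Toy data: each frame lists candidate segments as hypothetical mean RGB colors.
# A reddish segment recurs in every frame; the other candidates are clutter.
frames = [
    [(0.90, 0.10, 0.10), (0.20, 0.80, 0.30)],
    [(0.88, 0.12, 0.10), (0.50, 0.50, 0.90)],
    [(0.10, 0.10, 0.70), (0.91, 0.09, 0.11)],
]

model = build_appearance_model(frames, radius=0.1)
hits = detect(frames, model, radius=0.1)
print(hits)  # [[0], [0], [1]]
```

Note that no motion model appears anywhere in the sketch: each frame is searched independently using appearance alone, which is why a detector of this kind can pick a person up again after an occlusion.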


Text Reference

Deva Ramanan and D.A. Forsyth. Finding and tracking people from the bottom up. In CVPR, II: 467–474. 2003.

BibTeX Reference

@inproceedings{RamananF_CVPR_2003,
  AUTHOR = "Ramanan, Deva and Forsyth, D.A.",
  TAG = "people",
  TITLE = "Finding and tracking people from the bottom up",
  BOOKTITLE = "CVPR",
  YEAR = "2003",
  PAGES = "II: 467-474",
  BIBSOURCE = "http://www.visionbib.com/bibliography/motion-f736.html#TT53065"
}